What is zero-copy?

Data volumes keep growing rapidly, which makes efficiency a crucial factor in system performance. Conventional data transfer methods require the central processing unit (CPU) to perform several copy operations, and this overhead limits performance. Zero-copy is an optimization strategy that addresses this by eliminating redundant copies of data within the system. Instead of the CPU acting as an intermediary that copies every byte, data flows between devices and memory directly, which frees CPU cycles and lowers latency.

The traditional approach to data transfer copies data back and forth between kernel space and user space, wasting CPU cycles and memory bandwidth.

This inefficiency is especially noticeable in data-intensive applications. Zero-copy techniques minimize CPU involvement by relying on mechanisms such as memory mapping and Direct Memory Access (DMA), so the CPU does not have to copy the data on its way through the system. This can substantially increase throughput and reduce latency. This article explains the basics of zero-copy, along with its advantages, uses, and supporting technology, and shows how it can improve data transfer workflows.
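Memory mapping, one of the mechanisms mentioned above, can be illustrated with Java NIO's FileChannel.map(). The following is a minimal sketch, not code from the original post: it counts newline characters by reading the file's pages through a MappedByteBuffer, so the contents are never copied into a Java byte array by read() calls. The class name MappedLineCount and the newline-counting task are hypothetical choices for illustration. A single mapping is limited to Integer.MAX_VALUE bytes, so very large files would have to be mapped in segments.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class MappedLineCount {
    // Counts '\n' bytes by reading the file's pages through a memory mapping,
    // so no read() call copies the contents into a Java byte[].
    static long countNewlines(Path path) throws IOException {
        try (FileChannel channel = FileChannel.open(path, StandardOpenOption.READ)) {
            // A single mapping is limited to Integer.MAX_VALUE bytes.
            MappedByteBuffer mapped = channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
            long count = 0;
            while (mapped.hasRemaining()) {
                if (mapped.get() == '\n') {
                    count++;
                }
            }
            return count;
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("zero-copy-demo", ".txt");
        tmp.toFile().deleteOnExit();
        Files.writeString(tmp, "one\ntwo\nthree\n");
        System.out.println(countNewlines(tmp)); // 3
    }
}
```

The mapping stays valid after the channel is closed, so closing the channel in try-with-resources does not invalidate the buffer while it is being read.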

Key Terminology

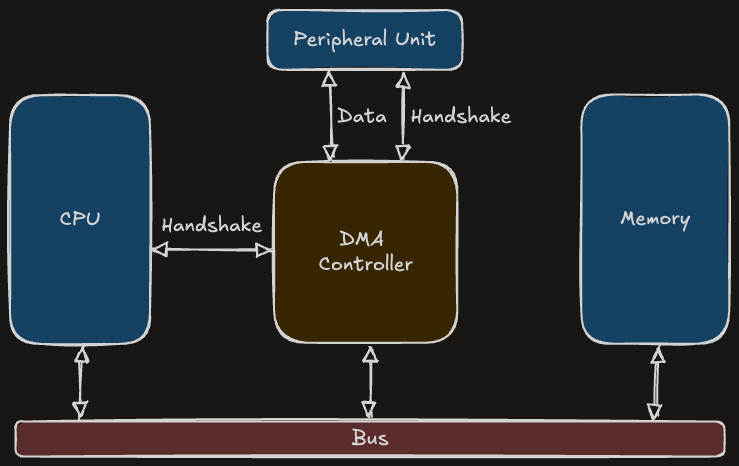

Direct Memory Access (DMA): DMA lets hardware transfer data directly to and from memory without passing it through the CPU, which speeds up I/O. Its main purpose is to relieve the CPU of the work of moving data. A dedicated DMA controller handles the transfer: the CPU initializes it by giving the controller the essential parameters, such as the source and destination addresses and the data size. The DMA controller then carries out the transfer on its own, without further CPU involvement, and notifies the CPU (typically with an interrupt) when the transfer is complete.

Context switching: Context switching is when the CPU switches from one task to another by saving the state of the current task and loading the state of the next. During a context switch, the operating system saves the state of the running process. It then selects a new process to run based on conditions such as priority, waiting time, and resource availability, loads the saved state of that process into the CPU, and resumes its execution. In this article, the term also covers the switch between user mode and kernel mode that occurs whenever an application makes a system call such as read() or write(), and again when that call returns.

To understand the traditional and zero-copy approaches, let’s consider a scenario:

Reading data from a file and transferring that data to another program over the network

Data transfer: The traditional approach

The traditional method is conceptually simple:

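```java
import java.io.FileInputStream;
import java.io.OutputStream;
import java.net.Socket;

// SERVER_HOST, SERVER_PORT and FILE_PATH are constants defined elsewhere
try (Socket socket = new Socket(SERVER_HOST, SERVER_PORT);
     FileInputStream fileInputStream = new FileInputStream(FILE_PATH)) {
    OutputStream out = socket.getOutputStream();
    byte[] buffer = new byte[8192]; // user-space buffer; the size is arbitrary
    int bytesRead;
    // Each read() copies data from a kernel buffer into this user buffer;
    // each write() copies it back into a kernel socket buffer.
    while ((bytesRead = fileInputStream.read(buffer)) != -1) {
        out.write(buffer, 0, bytesRead);
    }
}
```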

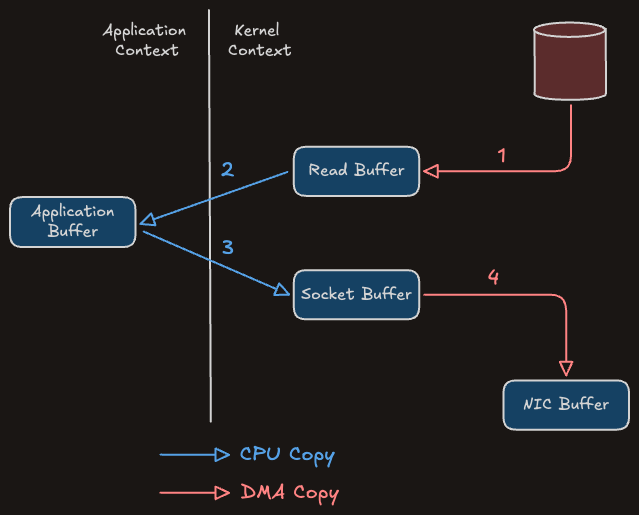

With the traditional approach, the data is copied four times, and there are four context switches between user mode and kernel mode. The image above shows how the data moves internally from the file to the socket.

These context switches and redundant copies between user mode and kernel mode are what make the traditional approach inefficient. The steps shown in the image are as follows:

- The read() call invokes the sys_read() system call, which causes a context switch from user mode to kernel mode. The DMA engine reads the file data from disk into a kernel buffer (first copy, performed by DMA).
- The kernel copies the requested data from the kernel read buffer into the user buffer (second copy, performed by the CPU). read() then returns, causing a context switch from kernel mode back to user mode.
- The write() call causes a context switch from user mode to kernel mode. The data is copied again, from the user buffer into a kernel buffer associated with the socket (third copy, performed by the CPU).
- write() returns, causing a context switch from kernel mode back to user mode. Independently and asynchronously, the DMA engine performs the final copy, moving the data from the socket buffer to the protocol engine, which sends it over the network (fourth copy, performed by DMA).

Because every chunk of data is copied four times (twice by the CPU) and every read()/write() pair costs four context switches, this approach can become a bottleneck when large amounts of data are transferred. Zero-copy removes these redundant copies, improving performance.
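To put rough numbers on this, here is a back-of-the-envelope model of the read()/write() loop. It is a sketch under stated assumptions, not a measurement: it assumes an 8 KiB buffer (an arbitrary size chosen for illustration), assumes every read() fills the buffer completely, and counts two mode switches per system call, including the final read() that returns -1 at end of file. The class and method names are hypothetical.

```java
public class TraditionalCost {
    // Number of read()/write() pairs for a file of fileBytes, i.e. ceil(N / B).
    // Assumes every read() fills the buffer completely, which is typical for
    // regular files but not guaranteed by the API.
    static long readWritePairs(long fileBytes, int bufferBytes) {
        return (fileBytes + bufferBytes - 1) / bufferBytes;
    }

    // Four switches per pair, plus two for the last read() that returns -1.
    static long contextSwitches(long fileBytes, int bufferBytes) {
        return 4 * readWritePairs(fileBytes, bufferBytes) + 2;
    }

    // The CPU copies every byte twice: kernel -> user (read) and user -> kernel (write).
    static long cpuBytesCopied(long fileBytes) {
        return 2 * fileBytes;
    }

    public static void main(String[] args) {
        long oneGiB = 1L << 30;
        int buffer = 8192;
        System.out.println(readWritePairs(oneGiB, buffer));  // 131072
        System.out.println(contextSwitches(oneGiB, buffer)); // 524290
        System.out.println(cpuBytesCopied(oneGiB));          // 2147483648
    }
}
```

Under these assumptions, sending a 1 GiB file costs over half a million mode switches and 2 GiB of CPU copying, which is the overhead zero-copy targets.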

Data transfer: The zero-copy approach

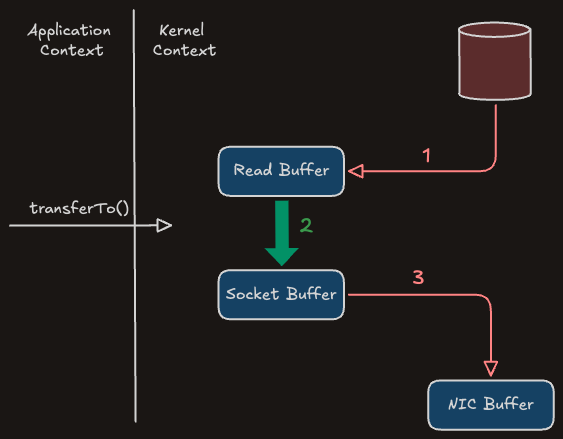

Some of the copies in the traditional approach are not actually required. The application does nothing with the data except read it into its buffer and write it back out to the socket buffer. Instead, the data can be transferred directly from the read buffer to the socket buffer, and Java NIO's FileChannel.transferTo() method does exactly this.

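```java
import java.io.FileInputStream;
import java.net.InetSocketAddress;
import java.nio.channels.FileChannel;
import java.nio.channels.SocketChannel;
try (SocketChannel socketChannel = SocketChannel.open(new InetSocketAddress(SERVER_HOST, SERVER_PORT));
     FileChannel fileChannel = new FileInputStream(FILE_PATH).getChannel()) {
    long position = 0, size = fileChannel.size();
    // transferTo() may send fewer bytes than requested, so loop until the whole file is sent
    while (position < size) {
        position += fileChannel.transferTo(position, size - position, socketChannel);
    }
}
```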

The transferTo() method moves data from a file channel to a writable byte channel. How much copying it actually avoids depends on the operating system's zero-copy support: on Linux and some other UNIX systems, it uses the sendfile() system call internally, which avoids needless copying by transferring data directly between file descriptors.

```c
#include <sys/sendfile.h>

ssize_t sendfile(int out_fd, int in_fd, off_t *_Nullable offset, size_t count);
```

Source: Linux manual page
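To try transferTo() without a separate server, the following self-contained sketch writes a temporary file, sends it to a receiver on the loopback interface, and returns what the receiver read so it can be compared with the original. The class and method names (TransferToDemo, sendFile, roundTrip) are hypothetical. Whether the JDK actually uses sendfile() here depends on the platform and JDK version; the sketch only demonstrates the API, not the kernel path.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.Arrays;
import java.util.Random;

public class TransferToDemo {
    // Sends the whole file, looping because transferTo() may move fewer bytes than requested.
    static long sendFile(Path file, SocketChannel out) throws IOException {
        try (FileChannel in = FileChannel.open(file, StandardOpenOption.READ)) {
            long position = 0;
            long size = in.size();
            while (position < size) {
                position += in.transferTo(position, size - position, out);
            }
            return position;
        }
    }

    // Writes payload to a temp file, sends it over loopback with sendFile(),
    // and returns the bytes the receiving side read.
    static byte[] roundTrip(byte[] payload) throws Exception {
        Path file = Files.createTempFile("transfer-demo", ".bin");
        file.toFile().deleteOnExit();
        Files.write(file, payload);

        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0)); // any free port
            // The sender runs on its own thread so a full socket buffer cannot deadlock the demo.
            Thread sender = new Thread(() -> {
                try (SocketChannel client = SocketChannel.open(server.getLocalAddress())) {
                    sendFile(file, client);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            sender.start();

            ByteArrayOutputStream received = new ByteArrayOutputStream();
            try (SocketChannel peer = server.accept()) {
                ByteBuffer buf = ByteBuffer.allocate(8192);
                while (peer.read(buf) != -1) {
                    buf.flip();
                    received.write(buf.array(), 0, buf.limit());
                    buf.clear();
                }
            }
            sender.join();
            return received.toByteArray();
        }
    }

    public static void main(String[] args) throws Exception {
        byte[] payload = new byte[1 << 20]; // 1 MiB of pseudo-random test data
        new Random(42).nextBytes(payload);
        System.out.println(Arrays.equals(payload, roundTrip(payload))); // true
    }
}
```

The receiver deliberately uses an ordinary read loop: only the sending side benefits from transferTo(), which is the side this article focuses on.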

In the zero-copy approach, the transferTo() method improves efficiency by reducing the number of context switches and data copies. The steps shown in the image are as follows:

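- The transferTo() call switches from user mode to kernel mode. The DMA engine copies the contents of the file into a kernel read buffer (first copy), and the kernel then copies the data into the kernel buffer associated with the output socket (second copy, performed by the CPU).

- The third copy takes place as the DMA engine moves the data from the kernel socket buffer to the protocol engine. The call then returns, switching back to user mode.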

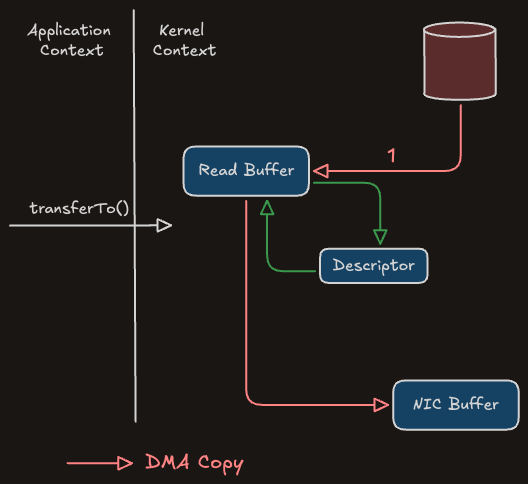

This is an improvement: there are now two context switches instead of four, and three data copies instead of four. It does not yet reach the goal of zero copies, however, because the kernel still uses the CPU to copy the data into the socket buffer. In Linux 2.4 and later, if the Network Interface Card (NIC) supports gather operations, the kernel can remove this remaining CPU copy as well. The underlying procedure is optimized, but nothing changes for the user: the application still just calls transferTo().

With gather support, transferTo() has the DMA engine copy the file contents into a kernel buffer. No data is copied into the socket buffer; only descriptors containing the location and length of the data are appended to it. The DMA engine then moves the data directly from the kernel buffer to the protocol engine, which eliminates the final CPU copy.
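The copy and context-switch counts described in this section can be summarized in a small model. This is an illustrative sketch with hypothetical names (it uses records, so it assumes Java 16 or later); it simply encodes the counts stated above rather than measuring anything, and makes explicit that "zero-copy" here means zero copies performed by the CPU, while DMA copies remain.

```java
import java.util.List;

public class CopyCount {
    // One movement of the data on its way from disk to the network.
    record Copy(String from, String to, boolean byCpu) {}

    // A transfer path: user/kernel mode switches per call, plus the copies each chunk goes through.
    record TransferPath(String name, int contextSwitches, List<Copy> copies) {
        long cpuCopies() {
            return copies.stream().filter(Copy::byCpu).count();
        }
    }

    static final List<TransferPath> PATHS = List.of(
        new TransferPath("read() + write()", 4, List.of(
            new Copy("disk", "kernel read buffer", false),          // DMA
            new Copy("kernel read buffer", "user buffer", true),    // CPU
            new Copy("user buffer", "socket buffer", true),         // CPU
            new Copy("socket buffer", "protocol engine", false))),  // DMA
        new TransferPath("transferTo()", 2, List.of(
            new Copy("disk", "kernel read buffer", false),
            new Copy("kernel read buffer", "socket buffer", true),
            new Copy("socket buffer", "protocol engine", false))),
        new TransferPath("transferTo() + NIC gather", 2, List.of(
            new Copy("disk", "kernel read buffer", false),
            // only a descriptor (location, length) is appended to the socket buffer
            new Copy("kernel read buffer", "protocol engine", false))));

    public static void main(String[] args) {
        for (TransferPath p : PATHS) {
            System.out.printf("%-26s switches=%d copies=%d cpuCopies=%d%n",
                    p.name(), p.contextSwitches(), p.copies().size(), p.cpuCopies());
        }
    }
}
```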

Comparison: Traditional vs Zero-copy

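| Feature | Traditional Approach | Zero-Copy (transferTo()) |
|---|---|---|
| Data Copies | 4 (2 by the CPU) | 3 (1 by the CPU), or 2 (0 by the CPU) with NIC gather support |
| CPU Usage | High (two CPU copies) | Low (at most one CPU copy) |
| Memory Bandwidth | Higher (data passes through user space) | Lower (data stays in the kernel) |
| Context Switches | 4 per read()/write() pair | 2 per transferTo() call |
| Performance for Large Files | Slower | Faster |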

The source code for this post is available in my GitHub repository. Feel free to explore and contribute.

Thanks for reading! I hope this post gave you a good look at the traditional and zero-copy methods underlying data transfer.